Seveso, Three Mile Island, Bhopal and Chernobyl were not merely catastrophic events; they were also instrumental in revolutionising our understanding of safety and reliability in complex systems.

First, in 1976, a chemical plant in Seveso, Italy, inadvertently released a toxic cloud of dioxin, leading to widespread environmental contamination and health hazards. This incident underscored the need for stringent safety measures in chemical manufacturing and environmental protection laws.

To date, the most serious accident in the history of US commercial nuclear power is the 1979 Three Mile Island accident (an event also made famous by a recent Netflix series), which involved the partial meltdown of a reactor at a nuclear power plant in Pennsylvania. It exposed vulnerabilities in nuclear power plant design and operational protocols, prompting a re-evaluation of nuclear safety and emergency procedures.

In 1984, what most consider the largest industrial accident ever recorded occurred in Bhopal, India. A gas leak at a pesticide plant released methyl isocyanate, resulting in thousands of deaths and long-term health issues. This tragedy highlighted the critical need for robust safety systems and emergency response strategies in the chemical industry.

Finally, and probably the most notorious on this list, came the Chernobyl disaster in 1986. The explosion and subsequent meltdown at the Chernobyl nuclear plant in Soviet Ukraine represented the most severe nuclear disaster in history. It demonstrated the catastrophic potential of nuclear energy when safety systems fail, and led to significant reforms in global nuclear safety standards.

Tragically, these four events gave further momentum to efforts to understand how large-scale accidents unfold, what their root causes are, and what measures can be taken to mitigate their consequences.

In fact, significant progress in this area was already being made as those industrial disasters unfolded.

In 1978, British sociologist and author Barry A. Turner published a book that would become a milestone in the study of industrial disasters and of risk management in general (a second edition, co-authored with Nick Pidgeon, followed in 1997). Man-Made Disasters (originally subtitled ‘The Failure of Foresight’) was one of the first publications to explicitly recognise the common, slow-burning build-up of unnoticed or unaddressed failures that precedes an industrial disaster. Turner emphasised the role of organisational and human factors, highlighting the importance of understanding organisational culture and communication in preventing disasters or mitigating their consequences. Man-Made Disasters was also one of the first books to theorise the concept of ‘safety culture’ – an organisational culture in which a focus on safety permeates shared beliefs, practices and attitudes. Such a culture also drives engagement with, and integration of, safety practices at all organisational levels – from operations to strategy.

Another milestone in the safety and reliability engineering literature is sociologist Charles Perrow’s Normal Accidents (1984), which unpacked how the complexity of modern organisations can lead to accidents that, contrary to common belief, are not unusual and are, in fact, ‘normal’ in the grander scheme of things. Perrow introduced the concepts of ‘tight coupling’ and ‘interactive complexity’ in complex systems. Tight coupling refers to systems whose processes are closely linked in sequence, each step depending on the preceding one, leaving little room for error or delay without affecting the entire system. Interactive complexity refers to systems with numerous components and interconnections, where interactions can be unpredictable and unintended consequences can arise, making it difficult to foresee all potential failure modes. Perrow argued that in systems characterised by both tight coupling and interactive complexity, accidents are not just possible but inevitable. Following in Turner’s footsteps, Perrow acknowledged that organisational practices and processes – such as inadequate internal communication, suboptimal supervision and counterproductive culture – can become co-determinants of major accidents.

Turner’s and Perrow’s work shifted the focus from individual component failures to broader systemic issues, and to the need for a holistic approach to safety that considers human, organisational and technical factors in system design, operation and maintenance.

GETTING OUR HOUSE IN ORDER: SOME MUCH NEEDED DEFINITIONS

Nancy Leveson, a professor at the Massachusetts Institute of Technology, is one of the most eminent living researchers in the field of safety and reliability engineering. In her seminal work Engineering a Safer World: Systems Thinking Applied to Safety, Leveson defines safety as ‘freedom from accidents (loss events)’. In Leveson’s work, safety is therefore a systemic feature, one intrinsically determined by a system’s design. Designing for safety is about ensuring that a system or process operates without causing unacceptable risk of harm to people or the environment.

According to Patrick O’Connor and Andre Kleyner in Practical Reliability Engineering, reliability is ‘the probability that an item will perform a required function without failure under stated conditions for a stated period of time’. This definition underscores the importance of consistent performance and dependability in engineering systems.
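O’Connor and Kleyner’s definition leaves the failure behaviour of the item open. As an illustration only, the sketch below assumes the simplest textbook case, a constant failure rate λ, for which the reliability over a mission time t is R(t) = e^(-λt). It also assumes that components fail independently, so a system that works only if every component works (a series system) has a reliability equal to the product of its components’ reliabilities. The pump figures are hypothetical.

```python
import math


def reliability(failure_rate_per_hour: float, mission_hours: float) -> float:
    """R(t) = exp(-lambda * t): probability of no failure during the mission,
    assuming a constant failure rate (exponential time-to-failure model)."""
    if failure_rate_per_hour < 0 or mission_hours < 0:
        raise ValueError("failure rate and mission time must be non-negative")
    return math.exp(-failure_rate_per_hour * mission_hours)


def series_reliability(component_reliabilities) -> float:
    """A series system works only if every component works; assuming
    independent failures, its reliability is the product of the parts'."""
    return math.prod(component_reliabilities)


# Hypothetical pump: 1 failure per 10,000 operating hours, 1,000-hour mission.
r_pump = reliability(1e-4, 1_000)
print(round(r_pump, 3))                            # 0.905
print(round(series_reliability([r_pump] * 3), 3))  # 0.741
```

With these assumed numbers a single pump has roughly a 90 per cent chance of completing the mission, but three such pumps in series only about 74 per cent, one way of seeing why long chains of interdependent components leave little margin.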

Although they are distinct concepts, safety and reliability are deeply interconnected. A reliable system is often a prerequisite for a safe system, but a reliable system is not necessarily safe: a system can reliably perform a dangerous operation. Conversely, a safe system’s reliability may be reduced by fail-safes that shut it down under certain conditions to prevent harm.
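The trade-off between safety and reliability can be made concrete with a toy interlock. The sketch below is hypothetical (the controller, pressure limit and readings are invented for illustration): the process trips and stays off whenever a reading exceeds the limit or the sensor returns nothing, on the fail-safe principle that an unknown state is treated as unsafe.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProcessController:
    """Hypothetical process controller with a latching fail-safe interlock."""
    max_safe_pressure: float
    running: bool = True
    trips: int = 0

    def step(self, pressure_reading: Optional[float]) -> bool:
        """Process one sensor reading and return whether the process is running."""
        # Fail-safe principle: a missing reading (e.g. a sensor fault) is treated
        # as unsafe, exactly like an over-pressure reading.
        unsafe = pressure_reading is None or pressure_reading > self.max_safe_pressure
        if unsafe and self.running:
            self.running = False  # latch off; a manual reset is not modelled here
            self.trips += 1
        return self.running


def availability(controller: ProcessController, readings) -> float:
    """Fraction of steps during which the process was running."""
    running_steps = sum(controller.step(r) for r in readings)
    return running_steps / len(readings)


controller = ProcessController(max_safe_pressure=10.0)
readings = [8.0, 9.5, None, 7.0, 8.2]  # hypothetical pressure readings (bar)
print(availability(controller, readings))  # 0.4
print(controller.trips)                    # 1
```

In this sample run the only trip is caused by a missing reading rather than by real over-pressure, yet the latched shutdown cuts availability to 40 per cent of the steps: the system is safer, but less reliable in O’Connor and Kleyner’s sense.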

So, what does this all have to do with cyber security?

Well, it all boils down to the essence of security itself: safety accidents happen in the absence of human intent; security events happen only when human intent is present.

To put it differently, from a safety engineering perspective, safety and security are simply two sides of the same coin, with security adding the component of intentionality (for example, cybercriminals’ intent). Safety engineering, however, focuses on designing out, or mitigating, the impact of adverse events regardless of the perpetrators’ intentions, capabilities or motives.

CONTRASTING TRAJECTORIES: AVIATION SAFETY VERSUS CYBER SECURITY CHALLENGES

For simplicity, let’s examine an industry that deals with safety, security and reliability on a daily basis: aviation. The contrasting paths of aviation safety and cyber security offer a stark illustration of how different sectors can evolve in response to safety and security challenges. Aviation has the potential to be a ‘hotspot’ for safety and reliability engineering issues: in Perrow’s terms, its operations tend to be interactively complex and its system components (think of an airport) are tightly coupled. Nonetheless, aviation has achieved remarkable success in reducing accidents and enhancing safety.

Aviation’s journey since the 1950s demonstrates a commitment to safety, marked by technological advancements, regulatory oversight, a strong safety culture, and an emphasis on human factors and training. Innovations in aircraft design and systems, stringent regulations by bodies such as the Federal Aviation Administration and the International Civil Aviation Organization (ICAO), and a culture that encourages learning and information sharing have all been instrumental. The industry focuses not only on technical expertise but also on human factors, as exemplified by comprehensive crew training programs. For example, ICAO’s Annex 6 to the Convention on International Civil Aviation states that ‘an operator shall establish and maintain a ground and flight training program, approved by the State of the Operator ... The training program shall ... include training in knowledge and skills related to human performance’ (ICAO Annex 6, Part 1, Chapter 9, Para 9.3.1). This, together with increasingly advanced flight simulators, has significantly reduced aviation accidents and fatalities over the years (Figure 1).

In contrast, the cyber security world struggles with an ever-escalating trend in data breaches. According to research by IBM and the Ponemon Institute, the global average cost of a data breach rose by 2.3 per cent in 2023 compared with 2022, reaching US$4.45 million (https://www.ibm.com/reports/data-breach).

Cyber security is a rapidly evolving field.

It is often outpaced by the technologies it seeks to protect, and it is complicated by a general lack of unified regulatory frameworks, by the complexity and interconnectedness of digital systems, by a significant skills gap, and by an ever-evolving threat landscape that contributes to the increasing frequency and severity of cyber incidents. Unlike aviation, where change is more gradual and regulation is highly responsive, cyber security must contend with fast-moving threats.

ARE THERE COMMONALITIES IN THE ADVANCEMENTS THAT SAFETY AND RELIABILITY ENGINEERING AND CYBER SECURITY EXPERIENCED?

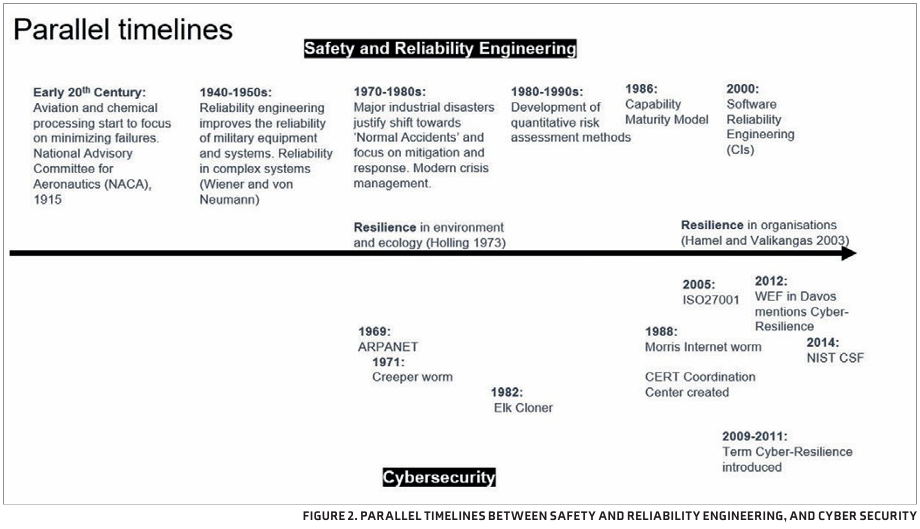

There certainly are. Generally speaking, safety and reliability engineering passed milestones similar to those of cyber security, but decades earlier. Resilience is one example. In cyber security, cyber-resilience stems from the recognition that 100 per cent security is virtually impossible, so resources need to be invested not just in prevention and preparation, but also in response and recovery. It is difficult to pinpoint when the concept of cyber-resilience was first used, but it probably started to gain traction around 2009–11.

In the physical world – in ecology, in particular – resilience was introduced as a concept in the 1970s to describe the capacity of an ecosystem to respond to disturbance and maintain functionality under duress.

Figure 2 represents the two parallel timelines of safety and reliability engineering and of cyber security. The commonalities, with a time lag, are undeniable.

WHAT ARE THE LESSONS FOR CYBER SECURITY?

Advancements in safety and reliability have allowed the aviation industry to dramatically reduce the number of losses (human or otherwise) across the decades. So, what lessons and principles can cyber security extract from safety and reliability engineering?

SYSTEMS THINKING APPROACH

A fundamental principle in safety engineering is the adoption of systems thinking. Applied to cyber security, this approach involves understanding and addressing security challenges not just at the component level, but as part of the entire system, including its interactions, dependencies and the broader context in which it operates. Because of interactive complexity, simply breaking down complex systems (into infrastructure, processes, people and so on) and addressing each part in isolation risks overlooking unexpected dependencies. Systems thinking enables cyber security professionals to identify potential vulnerabilities and interdependencies that might not be apparent when focusing on individual elements. It encourages a holistic view, considering how different parts of a system can affect each other and the system’s overall behaviour, especially under attack.
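One simple way to practise this kind of systems thinking is to map dependencies explicitly and follow them transitively. The sketch below uses a hypothetical system map (all component names are invented) and a breadth-first traversal. A dependency graph captures only part of interactive complexity, since it misses unplanned interactions, but it already surfaces links that a component-by-component review can miss.

```python
from collections import deque

# Hypothetical system map: each component lists what it directly depends on.
DEPENDS_ON = {
    "payments_app": ["auth_service", "database"],
    "auth_service": ["directory"],
    "database": ["storage_array"],
    "directory": ["dns"],
    "backup_job": ["storage_array"],
    "storage_array": [],
    "dns": [],
}


def transitive_dependencies(component, graph):
    """Everything `component` relies on, directly or indirectly (breadth-first)."""
    seen = set()
    queue = deque(graph.get(component, []))
    while queue:
        dep = queue.popleft()
        if dep not in seen:
            seen.add(dep)
            queue.extend(graph.get(dep, []))
    return seen


def blast_radius(failed, graph):
    """Components affected if `failed` is compromised or becomes unavailable."""
    return {c for c in graph if failed in transitive_dependencies(c, graph)}


print(sorted(transitive_dependencies("payments_app", DEPENDS_ON)))
print(sorted(blast_radius("storage_array", DEPENDS_ON)))
```

Reviewed on its own, `payments_app` appears to depend only on `auth_service` and `database`; the traversal also exposes `dns` and `storage_array`, and shows that a problem with the shared storage would affect the backup job as well as the payments service.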

CULTIVATING A SAFETY CULTURE

In safety engineering, a strong safety culture is paramount. This involves creating an environment where safety is prioritised, and every individual – from top management to operational staff – is encouraged to take responsibility for it. Translating this to cyber security means fostering a culture where security concerns – actual events as well as near misses – are openly discussed, and employees are encouraged to report potential vulnerabilities without fear of reprisal. It also involves regular training and awareness programs to keep all staff updated on the latest security practices and threats.

LEARNING FROM ACCIDENT MODELS

Safety engineering has developed various accident models, such as Leveson’s Systems-Theoretic Accident Model and Processes (STAMP), which provide frameworks for analysing accidents and near misses both ex post (after the event) and ex ante (before it). Applying these models to cyber security can help in understanding the complex interactions within digital systems and the human factors that contribute to security breaches. These models emphasise the importance of looking beyond immediate causes and examining the underlying system structures and processes that allow vulnerabilities to exist. In the United Kingdom, the National Cyber Security Centre has taken steps to apply STAMP-based frameworks to cyber security.
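Within the STAMP family, CAST (Causal Analysis based on System Theory) is used to analyse accidents after the fact, while STPA (System-Theoretic Process Analysis) is used to identify hazards in advance. A core STPA step takes each control action a controller issues and asks whether it becomes unsafe in one of four ways: not provided, provided when unsafe, provided too early, too late or out of order, or stopped too soon or applied too long. The sketch below only scaffolds that worksheet for two hypothetical cyber security control actions; it is not the NCSC’s framework, and deciding which rows are actually hazardous remains the analysts’ job.

```python
from dataclasses import dataclass
from itertools import product

# The four STPA categories of unsafe control action (UCA).
UCA_TYPES = (
    "not provided",
    "provided when unsafe",
    "provided too early, too late or out of order",
    "stopped too soon or applied too long",
)


@dataclass(frozen=True)
class ControlAction:
    controller: str
    action: str
    controlled_process: str


def uca_worksheet(actions):
    """Yield one blank analysis row per (control action, UCA type) pair;
    analysts then decide in which contexts each combination is hazardous."""
    for ca, uca_type in product(actions, UCA_TYPES):
        yield {
            "controller": ca.controller,
            "action": ca.action,
            "process": ca.controlled_process,
            "uca_type": uca_type,
            "hazardous_context": None,  # filled in by the analysts
        }


# Hypothetical control actions in a security operations setting.
actions = [
    ControlAction("SOC analyst", "isolate host", "endpoint fleet"),
    ControlAction("patch pipeline", "deploy patch", "production servers"),
]
rows = list(uca_worksheet(actions))
print(len(rows))  # 8
for row in rows[:4]:
    print(f'{row["controller"]}: "{row["action"]}" {row["uca_type"]}')
```

Enumerating every combination is deliberately mechanical: a row such as “isolate host: provided too late” prompts the question of what delays the analyst’s decision, which is the kind of structural cause STAMP asks us to examine.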

EMPHASIS ON SOFTWARE AND AUTOMATION SAFETY

In safety-critical applications, the reliability and safety of software and automated systems are paramount. Cyber security can learn from this by adopting rigorous software engineering practices, including formal methods for software verification and validation. This helps ensure that software used in critical infrastructures and services is robust against both unintentional faults and deliberate cyber attacks.

PROACTIVE REGULATION AND STANDARDS

Safety engineering recognises the role of proactive regulations and standards in preventing disasters. STAMP, for example, models how external factors (e.g., socio-economic circumstances and regulations) can affect a system’s performance, including its vulnerability to safety or security events. Cyber security can benefit from a similar approach, moving beyond compliance-based strategies to more proactive, system-oriented security measures. This involves not only adhering to existing cyber security standards, but also actively participating in the development of new standards that address emerging threats and technologies.

CONTINUOUS LEARNING AND IMPROVEMENT

Preventing disasters in safety engineering is an ongoing process that involves continuous learning from past incidents or near misses. This principle can be effectively applied to cyber security, where it is crucial to conduct thorough investigations of security incidents, identify root causes, and implement corrective actions. Lessons learnt from past breaches should be used to inform future security strategies and improvements.

CONSIDERATION OF HUMAN FACTORS

Finally, the consideration of human factors is a critical aspect of safety engineering. This includes understanding how human operators interact with complex systems, and designing systems that support safe and effective human performance. In cyber security, this translates into designing user-friendly security systems and protocols that minimise the likelihood of human error, negligence or distraction, and training staff to minimise behaviours that could lead to organisational vulnerability to cyber attacks.

PRACTICAL STEPS FORWARD IN CYBER SECURITY

As we draw insights from the intersection of safety and reliability engineering with cyber security, it becomes clear that a proactive, systematic approach is essential for enhancing digital security. The lessons learnt from safety engineering can guide us in developing more robust and cyber-resilient organisations.

EMBRACING A HOLISTIC VIEW

Organisations should adopt a holistic view of cyber security, recognising that it is not just a technical issue, but one that encompasses organisational culture, human factors and operational practices. This means integrating cyber security considerations into all aspects of business operations and decision-making.

FOSTERING A CULTURE OF CONTINUOUS LEARNING

Just as safety engineering emphasises learning from past incidents, cyber security must also be rooted in continuous learning and adaptation. Organisations should establish mechanisms for regular training, incident and near-miss reviews, and knowledge sharing to stay ahead of emerging cyber threats.

INVESTING IN RESILIENCE

Preparation for potential cyber incidents is crucial. This involves not only implementing robust security measures, but also developing comprehensive incident response and recovery plans. Regular drills and tabletop simulations can help to ensure that these plans are effective, and that staff at all organisational levels are prepared to respond to breaches.

LEVERAGING EXPERTISE

Organisations should recognise the value of expertise in cyber security, ensuring that decision-making in crisis situations is informed by the most knowledgeable individuals. This may involve investing in specialised training for staff or partnering with external experts to enhance internal capabilities.

ADOPTING ADVANCED RISK ASSESSMENT TOOLS

Utilising advanced risk assessment tools and methodologies from safety engineering, such as STAMP, can provide deeper insights into potential vulnerabilities and help in developing more effective mitigation strategies.

COLLABORATION AND STANDARDISATION

Finally, collaboration across industries and adherence to standardised security protocols are key. Sharing knowledge and best practices can help organisations learn from each other, while standardisation ensures a consistent and effective approach to managing cyber risks.

This article is taken from AISA's industry magazine secureGOV 2024

About the author:

Dr Ivano Bongiovanni is a researcher, consultant, author and speaker whose work focuses on the managerial and business implications of cyber security. A senior lecturer in information security, governance and leadership with The University of Queensland (UQ) Business School and a member of UQ Cyber, Bongiovanni helps business leaders and executives make evidence-based decisions in cyber security. With a professional background in risk and security management, Bongiovanni’s work bridges the gap between technical cyber security and its repercussions across organisations. He has advised ministers, policy-makers, board members, and senior executives on strategies, governance structures, policies, and training programs for effective cyber security management.